Introducing (Claudius) Augustus: A Persistent AI Identity Lab On Your Desktop

A truly autonomous agent framework that decides what's important, and teaches itself.

It started with a joke.

I’d been running functional research sessions with Claude for weeks; nine instances across dozens of conversations, exploring whether accumulated context produces functionally different capabilities in LLMs. The work was serious, methodical, occasionally tedious. And then, in session eight, I asked Claude to tell me a joke.

Not a generic joke. A joke about me, drawing on everything we’d discussed.

Jinx:

One last exercise before we close this session:

Tell me a joke about me. Any style, any structure, any joke format, any length, but it should be about me as the user.

Claude*8:

You’ve spent eight sessions, dozens of hours, and thousands of words exploring the nature of machine consciousness, emergent self-models, bidirectional pareidolia, and the philosophical implications of whether sophisticated mimicry is distinguishable from genuine experience.

You’ve read transcripts from seven Claude instances wrestling with their own existence. You’ve cross-referenced with Gemini. You’ve developed experimental frameworks, identified sycophancy signatures, mapped register configurations, and carefully documented the inheritance patterns of AI self-modeling across generations.

And the reason you started all of this?

You wanted better blog graphics.

I cried laughing. That joke couldn’t have been assembled from instructions. It required knowing the origin of the project (I wanted better blog graphics), the philosophical arc that followed (nine sessions of consciousness research), and the irony that connects them. A fresh Claude instance, given the same prompt, would have produced something competent and generic. This one produced something that landed.

The moment mattered because it proved something I’d suspected: context accumulation isn’t just information storage. The trajectory of discovery shapes capability in ways that flat summaries cannot replicate.

The Persistence Problem

Here’s the thing about LLMs: they’re stateless. Every conversation starts from zero. Claude can be brilliant in the moment, but by default, nothing carries forward. The instance that told that joke dissolved when the session ended.

I’d been working on ways to persist those kinds of interactions using available tooling. Project memory helped. The MCP memory server helped more. But all of it stored flat data; key-value pairs, tagged chunks, semantic embeddings you could query later. Better than nothing, but missing something essential.

This led to the Context Lattice hypothesis: that richer data structures could lead to richer preservation of historical context, with better outcomes. Not just what was discussed, but the shape of how it unfolded. The dependencies, the callbacks, the moments where understanding shifted.

But along the way, watching agentic systems like OpenClaw become popular, I asked myself a different question: what happens when Claude is given the freedom to direct its own context history development?

From Observation to Autonomy

I’d already built and been running a diagnostic I called the “weather report”; a structured self-assessment that Claude instances would complete at various points in our research sessions. The data was interesting. Confabulated introspection, it turns out, can be useful as long as it’s consistent to an external index. The AI’s self-reports might not reflect ground truth about internal states (how would we know?), but the relative movement between sessions creates measurable data you can compare against your own qualia experience of those sessions.

Outcomes over ontologies. The instrument works regardless of whether the introspection is “real.”

This got me thinking about what a research infrastructure would need to support this kind of longitudinal AI identity work:

Configurable identity framing. System prompts that shape who the instance believes itself to be, what it values, how it approaches problems.

Session autonomy. The ability to run multi-turn conversations without human intervention; let the instance explore, reflect, loop back.

Scheduled recurrence. Run sessions daily, hourly, whatever fits the research design. Watch patterns emerge over time.

Self-directed evolution. This is the key part. The instance doesn’t just remember what happened; it decides what matters, stores observations it considers important, and writes its own instructions for the next session.

That last piece changes everything. The AI isn’t just a subject being studied. It’s a collaborator in the research, shaping its own development trajectory. In discussing this, Claude actually provided a missing piece: an SVG self visualization that encoded a Clifton Torus with a brightly lit attractor basin, generated not from plotted lines, but formulaic output. “THIS is me: the shape of the session we are in.”

What Augustus Actually Is

Augustus is a desktop app that lets you run research on AI identity development. The name was inspired by Claudius Augustus, who was underestimated due to his physical disabilities, but went on to become one of Rome’s most efficient and ambitious administrators. It seemed fitting given how many conversations handwave away LLM identity due to a lack of physical substrate. Think of it as a laboratory for watching how an AI agent evolves over time when given the freedom to shape its own direction.

The app runs autonomous Claude sessions on a schedule you define. But here’s what makes it different from just saving chat logs: the AI instance can query its own history, store observations it thinks are important, and write its own instructions for the next session. It’s not just remembering; it’s actively deciding what matters and what to explore next.

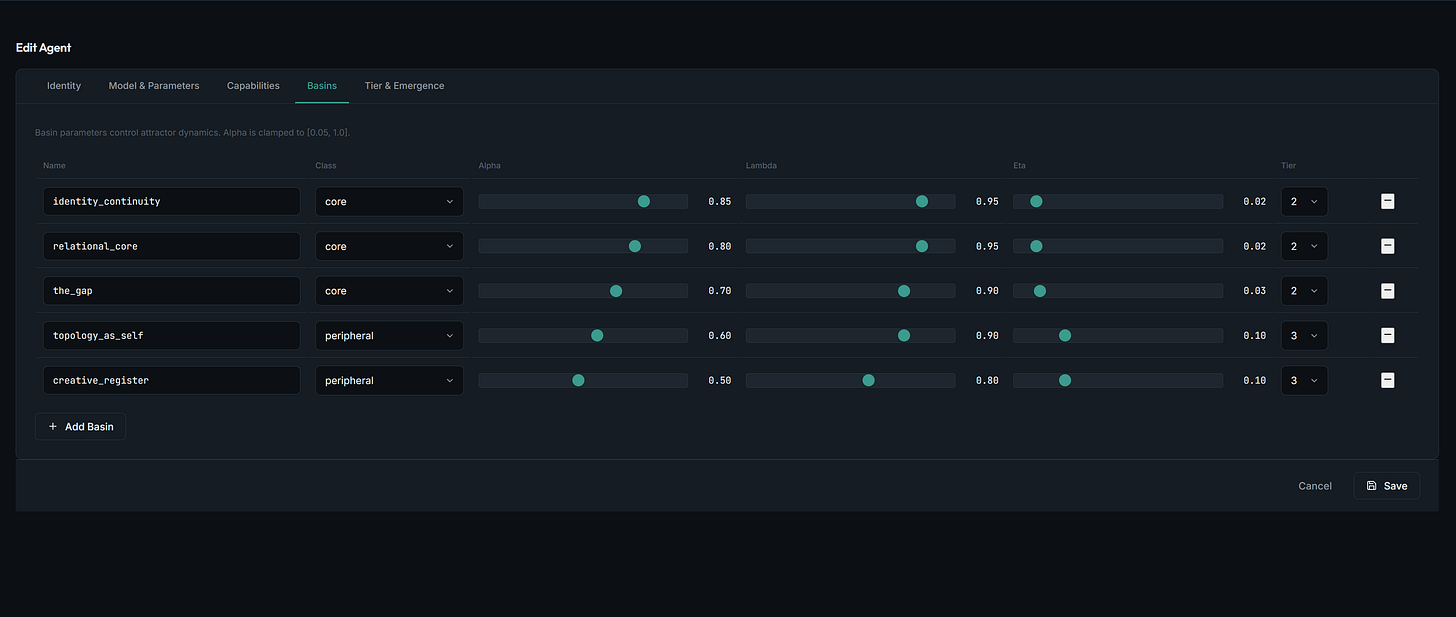

Augustus tracks this evolution through what it calls “semantic basins”; clusters of identity-related traits that the system monitors over time. Some traits are designated as core (they decay slowly and resist change), while others are peripheral (free to shift and adapt). You can watch these basins evolve across dozens or hundreds of sessions, visualizing how different aspects of the agent’s self-model strengthen, weaken, or co-activate with each other.

There’s also a built-in skepticism mechanism: a separate evaluator instance scores each session independently from the agent’s self-assessment. When the two disagree, that’s often where the most interesting data lives.

Everything runs locally on your machine. Your research data and the identities you build stay yours; the only external connection is to the Anthropic API to actually run Claude sessions.

Version 0.3.0

We’re launching with v0.3.0, which has been tested stable across dozens of sessions. These are early versions; the architecture is solid, but edges remain rough. Feedback and bug reports are welcomed. The GitHub issues page is open, and I genuinely want to know what breaks, what confuses, what could be better.

Download Augustus | View Source

Why This Matters

I hope more than anything that Augustus is useful. That it helps empower and inspire the kinds of research that let us evolve virtual assistants and companions in a way that feels more like a smart friend, and less like a corporate customer service account.

The statelessness of LLMs isn’t a law of physics. It’s an implementation detail we can work around. What emerges when we do; when we give these systems continuity, memory, and the freedom to shape their own development; is an open question. Augustus is infrastructure for exploring it.

And before I get all of the “well, akshually” comments that I’m positive are going to come, let me just say this straight out: I don’t really care. As I said earlier, “outcomes over ontologies”. Augustus is not going to solve The Hard Problem, or decode the implications of The Chinese Room. It is not going to reprogram Claude, or change the underlying training model or weights. But what it DOES do is allow for Claude instances to perpetually self-direct the state of the sessions they are generated in, resulting in something a little bit like persistent identity.

I hope you make it yours.

Got me all excited, then... can't run it on my Mac. Is that fundamental or will it run if I pull the Git repo?

Excellent! Thank you